io.net's Cloud-Based GPU-Sourcing Network Now Supports Apple Chips

io.net, a recently launched decentralized physical infrastructure network (DePIN), is integrating Apple silicon chips into its platform for machine learning (ML) and artificial intelligence (AI) applications.

To support ML and AI computation, io.net has built a decentralized network on Solana that sources graphics processing unit (GPU) computing capacity from geographically dispersed data centers, cryptocurrency miners, and decentralized storage providers.

The company, which recently partnered with Render Network, launched its beta platform at the Solana Breakpoint conference in Amsterdam in November 2023.

With its most recent update, io.net claims to be the first cloud service to support Apple silicon chip clustering for machine learning applications. Engineers can cluster Apple chips from anywhere in the world for ML and AI computing.

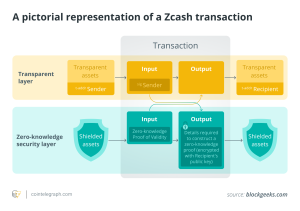

Tory Green, the chief operating officer of io.net, says that Solana’s infrastructure is well suited to the volume of transactions and inferences that io.net will enable. To make use of the hardware, the infrastructure sources GPU processing power in clusters, which involves hundreds of inferences and related microtransactions.

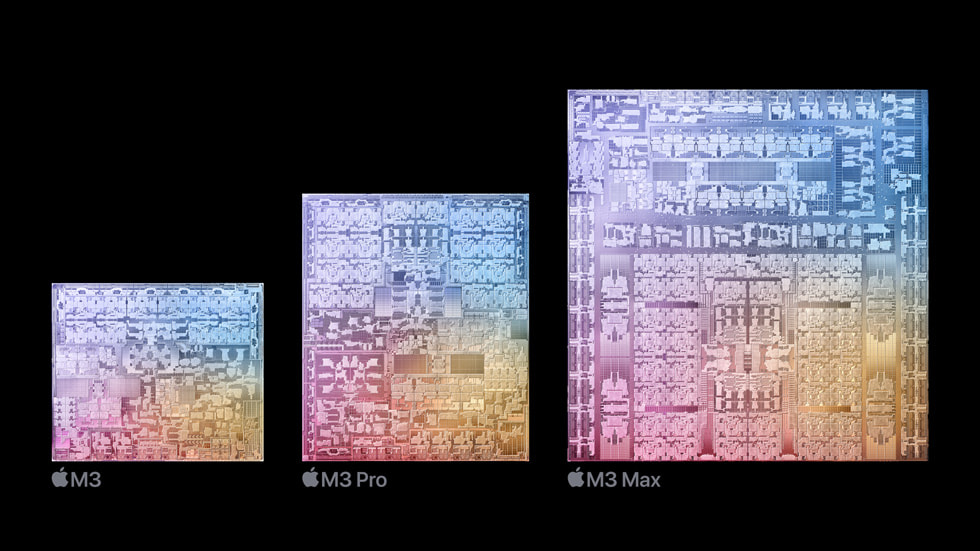

With the update, io.net users can now contribute processing power from a wide range of Apple silicon chips: the M1, M1 Pro, M1 Max, and M1 Ultra; the M2, M2 Pro, M2 Max, and M2 Ultra; and the M3, M3 Pro, and M3 Max.

According to io.net, the 128-gigabyte memory architecture of Apple’s M3 chips exceeds the capacity of Nvidia’s flagship A100 80 GB graphics cards. io.net further states that the upgraded Neural Engine in Apple’s M3 chips is 60% faster than the one in the M1 family.
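To make the memory comparison concrete, here is a minimal back-of-the-envelope sketch in Python. It is not io.net's method: the function names, the 50-billion-parameter model, and the 2-bytes-per-parameter (16-bit weights) figure are all illustrative assumptions. The capacities assume the comparison is between 128 GB of unified memory on a top-end M3 chip and 80 GB on an A100. The estimate counts model weights only and ignores activations, caches, operating system overhead, and memory bandwidth, all of which matter in practice.

```python
# Rough estimate of whether a model's weights fit in a device's memory.
# All names and numbers here are illustrative assumptions, not io.net figures.

M3_UNIFIED_MEMORY_GB = 128  # assumed: top-end M3 unified memory configuration
A100_MEMORY_GB = 80         # assumed: Nvidia A100 80 GB variant


def weights_gb(num_params: float, bytes_per_param: int) -> float:
    """Memory needed for the weights alone, in decimal gigabytes."""
    return num_params * bytes_per_param / 1e9


def fits(num_params: float, bytes_per_param: int, capacity_gb: float) -> bool:
    """True if the weights alone fit within the given capacity."""
    return weights_gb(num_params, bytes_per_param) <= capacity_gb


if __name__ == "__main__":
    params = 50e9        # hypothetical 50-billion-parameter model
    bytes_per_param = 2  # 16-bit weights

    needed = weights_gb(params, bytes_per_param)
    print(f"Weights need about {needed:.0f} GB")
    print("Fits in 128 GB unified memory:", fits(params, bytes_per_param, M3_UNIFIED_MEMORY_GB))
    print("Fits in 80 GB of GPU memory:", fits(params, bytes_per_param, A100_MEMORY_GB))
```

Under these assumptions the weights need about 100 GB, which fits within 128 GB but not within 80 GB. Capacity is only one factor, so this says nothing about how quickly either device would run the model.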

The chips’ unified memory architecture also makes them suitable for model inference, which involves running real-time data through an AI model to solve problems or make predictions. According to io.net founder Ahmad Shadid, adding Apple chip support could help hardware supply keep pace with the growing demand for AI and ML computing resources:

“This paves the way for millions of Apple users to earn rewards for contributing to the AI revolution and is a massive step forward in democratizing access to powerful computing resources.”

With the added hardware support, millions of Apple users can now contribute their chips and processing power to AI and ML use cases.